To start off our computer science fundamentals series, we will cover complexity analysis and Big O. Prior to understanding data structures and algorithms, it's important to understand how code is analyzed for efficiency in software engineering. This will come in handy later in deciding which data structure and algorithm is best to solve a problem.

What is complexity analysis

Prior to diving into computer science, I used to think all code weighed the same, meaning, all code completed the same task within the same timeframe. Lo and behold, complexity analysis has proven this is not the case! Although two different programs given the same number of inputs provide the same output, one typically gets the job done more efficiently. So, to put it simply, complexity analysis is the method by which we measure this efficiency.

What makes code efficient

Now, what exactly is efficiency? Efficiency is broken down between time and space. Key things to understand:

Time complexity: measures the amount of time it takes a program to complete. The faster your code, the better.

Space complexity: measures the amount of space (memory) a program takes up as it completes. The less space your code consumes, the better.

Both are important but we are generally more concerned with time complexity.

Big O analysis

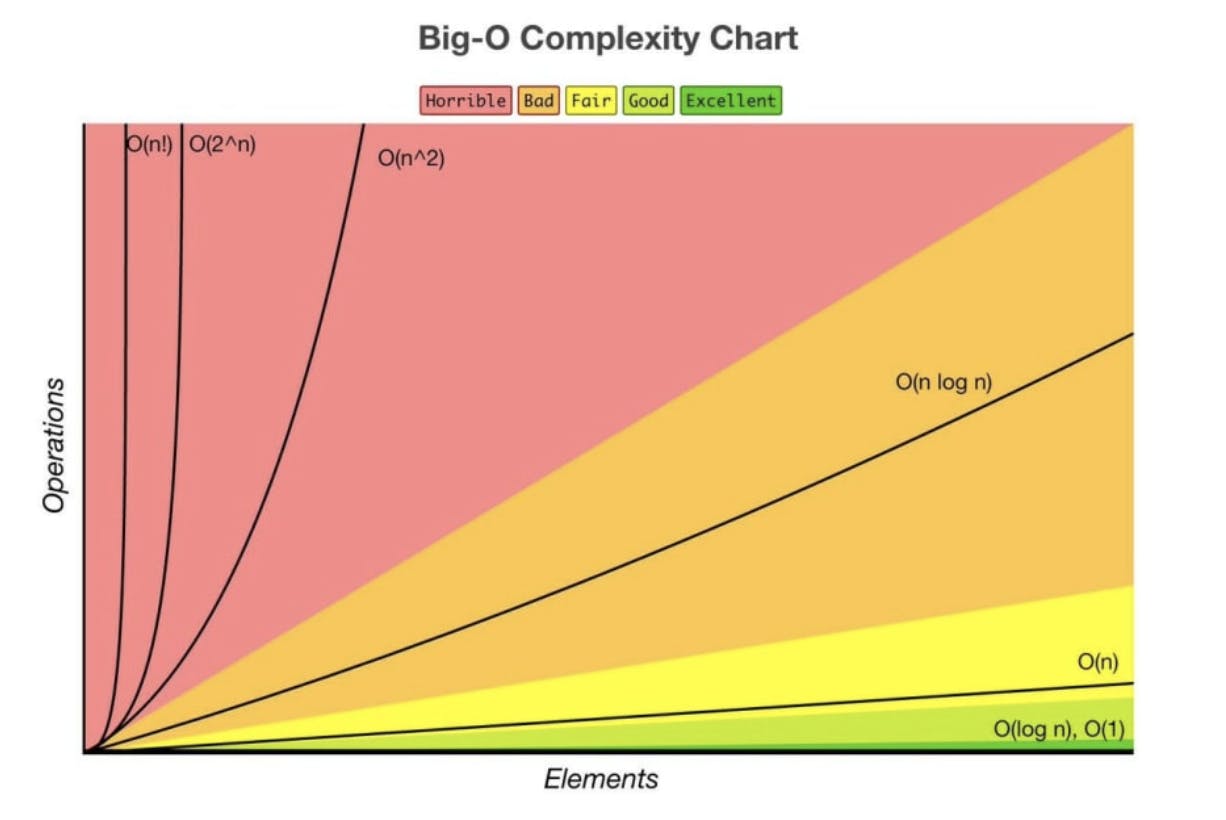

If complexity analysis is the method by which we measure the efficiency of code, we can then think of Big O as the unit by which we do this measuring. Big O analysis is used to rank an algorithm's performance in relation to the growth of input size. In other words, as our input size gets closer to an infinite number (obviously very large), how efficient does our algorithm remain?

Big O notation

Note: n represents input size

O(1) - Constant: No matter the size of n, our algorithm will take the same amount of time to execute.

O(log n) - Logarithmic: Time goes up linearly, while n goes up exponentially. Meaning, if it takes 1 second to compute 10 elements, it will take 2 seconds to compute 100 elements, etc.

O(n) - Linear: Our algorithm's performance will grow in direct proportion as our input. For example, if it takes 1 second to compute 1 element, it will take 2 seconds to compute 2 elements, 5 seconds to compute 5 elements, and so on.

O(n log n) - Loglinear: Algorithms that repeatedly divide a set of data in half, and then process those halves independently.

O(n²) - Quadratic: As the size of n increases, our algorithm's time to complete will increase exponentially.

We won't cover the others in the chart because as you can see, they're no better than the ones we've already covered anyway so if you ever find yourself in red, it's better to find a better solution to the problem at hand.

Calculating Big O

Let's calculate the Big O solution to Two Sum. Given an array, we must find the two numbers that add up to a given target. Our result should return their index, if there is no sum, return an empty array.

This solution utilizes a brute force approach.

1. function twoSum(arr, target){

2. for(let i=0; i < arr.length; i++) {

3. for(let j=i+1; j < arr.length; j++) {

4. if(arr[i] + arr[j] === target) return [arr[i],arr[j]]

5. }

6. }

7. return []

8. }

Line 2: This for loop traverses our arr array. Since it loops n times for each n element in our array, this line runs in O(n).

Line 3: This second for loop will always run one index ahead of the first loop. This way it's able to sum the numbers of index 1 and index 2 to check if they add to our target. So, for every item in the array, the second loop will traverse that one item n times in order to compare all numbers to that one number before moving to the second index and doing the same all over again. In other words, these two loops are executed in n x n resulting in O(n^2).

Line 4: This line will take the same time to execute no matter our input size. It's O(1).

Line 7: This is a simple statement not relying on n. It also runs in O(1).

Final verdict: Time: O(n^2) | Space: O(1) O(n^2) dominates all other runtimes when it comes to the worst-case scenario, therefore this solution runs in O(n^2).

This next solution utilizes the two-pointers technique.

1. function twoSum(arr, target) {

2. arr.sort((a,b) => a-b)

3. let start = 0;

4. let end = arr.length-1

5.

6. while(start < end) {

7. if (arr[start] + arr[end] === target) return [arr[start],arr[end]]

8. else if ((arr[start] + arr[end]) < target) start++;

9. else end--;

10. }

11. return []

12. }

line 2: The sort function traverses the array in order to sort it in increasing order. This line runs in O(n).

line 3: Initiating the start variable runs in O(1).

line 4. Initiating the end variable runs in O(1).

line 6. This while loop runs as long as start is less than end. For each loop, we will traverse the array checking to see if our loop statement is still truthy. This line runs in O(n).

line 7-8: These statements run in O(1).

Final verdict: Time: O(n) | Space: O(1)

O(n) dominates all other runtimes in this solution when it comes to the worst-case scenario, therefore this solution runs in O(n). This solution is obviously far superior to the first because it is more efficient.

Summary

Time complexity + space complexity = code efficiency

Time complexity measures the amount of time a program takes to complete. The faster your code, the better.

Space complexity measures the amount of space (memory) a program takes up as it completes. The less space, the better.

Big O is used to rank an algorithm's performance in relation to the growth of input size. As our input gets larger, how efficient does our code remain?

Study your big O notations.